AI prompts

Based on the official PyTorch implementation of StreamV2V:

# StreamV2V

[English](./README.md) | [中文](./README-cn.md) | [日本語](./README-ja.md)

**[Looking Backward: Streaming Video-to-Video Translation with Feature Banks](https://jeff-liangf.github.io/projects/streamv2v/)**

<br/>

[Feng Liang](https://jeff-liangf.github.io/), [Akio Kodaira](https://scholar.google.co.jp/citations?user=15X3cioAAAAJ&hl=en), [Chenfeng Xu](https://www.chenfengx.com/), [Masayoshi Tomizuka](https://me.berkeley.edu/people/masayoshi-tomizuka/), [Kurt Keutzer](https://people.eecs.berkeley.edu/~keutzer/), [Diana Marculescu](https://www.ece.utexas.edu/people/faculty/diana-marculescu)

<br/>

The International Conference on Learning Representations (ICLR), 2025

[arXiv paper](https://arxiv.org/abs/2405.15757) | [Project page](https://jeff-liangf.github.io/projects/streamv2v/) | [Hugging Face demo](https://huggingface.co/spaces/JeffLiang/streamv2v)

## Highlight

StreamV2V performs real-time video-to-video translation on a single RTX 4090 GPU. Check the [video](https://www.youtube.com/watch?v=k-DmQNjXvxA) and [try it yourself](./demo_w_camera/README.md)!


In terms of functionality, StreamV2V supports face swapping (e.g., into Elon Musk or Will Smith) and video stylization (e.g., into Claymation or doodle art). Check the [video](https://www.youtube.com/watch?v=N9dx6c8HKBo) and [reproduce the results](./vid2vid/README.md)!


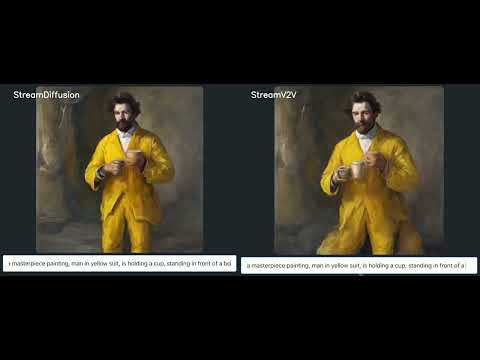

Although StreamV2V is designed for the vid2vid task, it can also be integrated seamlessly into txt2img applications. Unlike StreamDiffusion, which generates each image independently, StreamV2V generates images from text **continuously**, producing much smoother transitions. Check the [video](https://www.youtube.com/watch?v=kFmA0ytcEoA) and [try it yourself](./demo_continuous_txt2img/README.md)!

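The smoother transitions presumably come from reusing information from earlier frames instead of generating each frame in isolation; the paper title names feature banks as the mechanism. The plain-Python sketch below illustrates that general idea under stated assumptions: the bank stores self-attention keys and values from recent frames, and each new frame's queries attend over its own tokens plus the banked ones. `FeatureBank`, `extended_attention`, and the fixed-size FIFO window are hypothetical names and choices made for illustration. This is not the StreamV2V code or API.

```python
import math
from collections import deque


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention(queries, keys, values):
    """Scaled dot-product attention on plain lists of token vectors."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    dim = len(values[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in keys])
        out.append([sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)])
    return out


class FeatureBank:
    """Hypothetical bank of self-attention keys/values from recent frames.

    The fixed-size FIFO window is an assumption of this sketch, not
    necessarily how StreamV2V updates its bank.
    """

    def __init__(self, max_frames=2):
        self.frames = deque(maxlen=max_frames)  # oldest frame is evicted first

    def extended_attention(self, queries, keys, values):
        # Current-frame queries attend over current tokens plus banked tokens.
        bank_keys = [k for frame_keys, _ in self.frames for k in frame_keys]
        bank_values = [v for _, frame_values in self.frames for v in frame_values]
        out = attention(queries, keys + bank_keys, values + bank_values)
        # Store this frame's keys/values so later frames can attend to them.
        self.frames.append((keys, values))
        return out


if __name__ == "__main__":
    bank = FeatureBank(max_frames=2)
    frame1 = bank.extended_attention([[1.0, 0.0]], [[1.0, 0.0]], [[2.0, 3.0]])
    frame2 = bank.extended_attention([[1.0, 0.0]], [[0.0, 1.0]], [[0.0, 0.0]])
    print(frame1)  # [[2.0, 3.0]]
    print(frame2)  # roughly [[1.34, 2.01]]: frame 1's value is mixed into frame 2
    print(attention([[1.0, 0.0]], [[0.0, 1.0]], [[0.0, 0.0]]))  # [[0.0, 0.0]] without the bank
```

With an empty bank the call reduces to ordinary self-attention. From the second frame on, banked values from frame 1 flow into the output even though frame 2's own values are zero, which is the kind of temporal carry-over that smooths transitions. A bounded window keeps the per-frame cost constant, which matters for streaming; a real bank would more likely merge or compress entries than simply evict the oldest frame.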

## Installation

Please see the [installation guide](./INSTALL.md).

## Getting started

Please see the [getting started instructions](./vid2vid/README.md).

## Real-time camera demo on GPU

Please see the [demo with camera guide](./demo_w_camera/README.md).

## Continuous txt2img

Please see the [continuous txt2img demo guide](./demo_continuous_txt2img/README.md).

## LICENSE

StreamV2V is licensed under a [UT Austin Research LICENSE](./LICENSE).

## Acknowledgements

StreamV2V relies heavily on the open-source community. Our code is copied and adapted from [StreamDiffusion](https://github.com/cumulo-autumn/StreamDiffusion) with [LCM-LoRA](https://huggingface.co/docs/diffusers/main/en/using-diffusers/inference_with_lcm_lora). Besides the base [SD 1.5](https://huggingface.co/runwayml/stable-diffusion-v1-5) model, we also use a variety of LoRAs from [CIVITAI](https://civitai.com/).

## Citing StreamV2V :pray:

If you use StreamV2V in your research or wish to refer to the baseline results published in the paper, please use the following BibTeX entry.

```BibTeX
@article{liang2024looking,
  title={Looking Backward: Streaming Video-to-Video Translation with Feature Banks},
  author={Liang, Feng and Kodaira, Akio and Xu, Chenfeng and Tomizuka, Masayoshi and Keutzer, Kurt and Marculescu, Diana},
  journal={arXiv preprint arXiv:2405.15757},
  year={2024}
}

@article{kodaira2023streamdiffusion,
  title={StreamDiffusion: A Pipeline-level Solution for Real-time Interactive Generation},
  author={Kodaira, Akio and Xu, Chenfeng and Hazama, Toshiki and Yoshimoto, Takanori and Ohno, Kohei and Mitsuhori, Shogo and Sugano, Soichi and Cho, Hanying and Liu, Zhijian and Keutzer, Kurt},
  journal={arXiv preprint arXiv:2312.12491},
  year={2023}
}
```

## Contributors

<a href="https://github.com/Jeff-LiangF/streamv2v/graphs/contributors">
  <img src="https://contrib.rocks/image?repo=Jeff-LiangF/streamv2v" />
</a>

Assign at most 3 tags to the expected JSON {"id":"10491","tags":[]}, choosing only from the tag list I provide: [{"id":77,"name":"3d"},{"id":89,"name":"agent"},{"id":17,"name":"ai"},{"id":54,"name":"algorithm"},{"id":24,"name":"api"},{"id":44,"name":"authentication"},{"id":3,"name":"aws"},{"id":27,"name":"backend"},{"id":60,"name":"benchmark"},{"id":72,"name":"best-practices"},{"id":39,"name":"bitcoin"},{"id":37,"name":"blockchain"},{"id":1,"name":"blog"},{"id":45,"name":"bundler"},{"id":58,"name":"cache"},{"id":21,"name":"chat"},{"id":49,"name":"cicd"},{"id":4,"name":"cli"},{"id":64,"name":"cloud-native"},{"id":48,"name":"cms"},{"id":61,"name":"compiler"},{"id":68,"name":"containerization"},{"id":92,"name":"crm"},{"id":34,"name":"data"},{"id":47,"name":"database"},{"id":8,"name":"declarative-gui "},{"id":9,"name":"deploy-tool"},{"id":53,"name":"desktop-app"},{"id":6,"name":"dev-exp-lib"},{"id":59,"name":"dev-tool"},{"id":13,"name":"ecommerce"},{"id":26,"name":"editor"},{"id":66,"name":"emulator"},{"id":62,"name":"filesystem"},{"id":80,"name":"finance"},{"id":15,"name":"firmware"},{"id":73,"name":"for-fun"},{"id":2,"name":"framework"},{"id":11,"name":"frontend"},{"id":22,"name":"game"},{"id":81,"name":"game-engine "},{"id":23,"name":"graphql"},{"id":84,"name":"gui"},{"id":91,"name":"http"},{"id":5,"name":"http-client"},{"id":51,"name":"iac"},{"id":30,"name":"ide"},{"id":78,"name":"iot"},{"id":40,"name":"json"},{"id":83,"name":"julian"},{"id":38,"name":"k8s"},{"id":31,"name":"language"},{"id":10,"name":"learning-resource"},{"id":33,"name":"lib"},{"id":41,"name":"linter"},{"id":28,"name":"lms"},{"id":16,"name":"logging"},{"id":76,"name":"low-code"},{"id":90,"name":"message-queue"},{"id":42,"name":"mobile-app"},{"id":18,"name":"monitoring"},{"id":36,"name":"networking"},{"id":7,"name":"node-version"},{"id":55,"name":"nosql"},{"id":57,"name":"observability"},{"id":46,"name":"orm"},{"id":52,"name":"os"},{"id":14,"name":"parser"},{"id":74,"name":"react"},{"id":82,"name":"real-time"},{"id":56,"name":"robot"},{"id":65,"name":"runtime"},{"id":32,"name":"sdk"},{"id":71,"name":"search"},{"id":63,"name":"secrets"},{"id":25,"name":"security"},{"id":85,"name":"server"},{"id":86,"name":"serverless"},{"id":70,"name":"storage"},{"id":75,"name":"system-design"},{"id":79,"name":"terminal"},{"id":29,"name":"testing"},{"id":12,"name":"ui"},{"id":50,"name":"ux"},{"id":88,"name":"video"},{"id":20,"name":"web-app"},{"id":35,"name":"web-server"},{"id":43,"name":"webassembly"},{"id":69,"name":"workflow"},{"id":87,"name":"yaml"}]. Return the expected JSON.