# Automatically Download and Analyze Log Files from Remote Machines

Replace Splunk in your small company with this one weird trick!

This application is designed to collect and analyze logs from remote machines hosted on Amazon Web Services (AWS) and other cloud hosting services.

**Note**: This application was specifically designed for use with Pastel Network's log files. However, it can be easily adapted to work with any log files by modifying the parsing functions, data models, and specifying the location and names of the log files to be downloaded. It is compatible with log files stored in a standard format, where each entry is on a separate line and contains a timestamp, a log level, and a message. The application has been tested with log files several gigabytes in size from dozens of machines and can process all of it in minutes. It is designed for Ubuntu 22.04+, but can be adapted for other Linux distributions.

## Customization

To adapt this application for your own use case, refer to the included sample log files and compare them to the parsing functions in the code. You can also modify the data models to store log entries as desired.
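As a starting point for that kind of adaptation, here is a minimal sketch of a parsing function for the "timestamp, log level, message" layout described above. The line layout, the `LogEntry` class, and the `parse_line` function are illustrative assumptions, not the project's actual parser or data model; adjust the pattern and timestamp format to your own logs.

```python
import re
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Hypothetical line layout: "2023-05-01 12:34:56 [ERROR] message text".
# This is an assumption for illustration, not the Pastel Network format.
LINE_PATTERN = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s+"
    r"\[(?P<level>[A-Z]+)\]\s+"
    r"(?P<message>.*)$"
)


@dataclass
class LogEntry:
    """Hypothetical data model for one parsed log line."""
    timestamp: datetime
    level: str
    message: str


def parse_line(line: str) -> Optional[LogEntry]:
    """Return a LogEntry, or None if the line does not match the pattern."""
    match = LINE_PATTERN.match(line.strip())
    if match is None:
        return None
    return LogEntry(
        timestamp=datetime.strptime(match["timestamp"], "%Y-%m-%d %H:%M:%S"),
        level=match["level"],
        message=match["message"],
    )
```

Returning `None` for non-matching lines lets the caller skip continuation lines or stack traces without aborting the whole file.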

## Features

The application consists of various Python scripts that perform the following functions:

* **Connect to Remote Machines**: Using the boto3 library for AWS instances and an Ansible inventory file for non-AWS instances, the application establishes SSH connections to each remote machine.

* **Download and Parse Log Files**: Downloads specified log files from each remote machine and parses them. The parsed log entries are then queued for database insertion.

* **Insert Log Entries into Database**: Uses SQLAlchemy to insert the parsed log entries from the queue into an SQLite database.

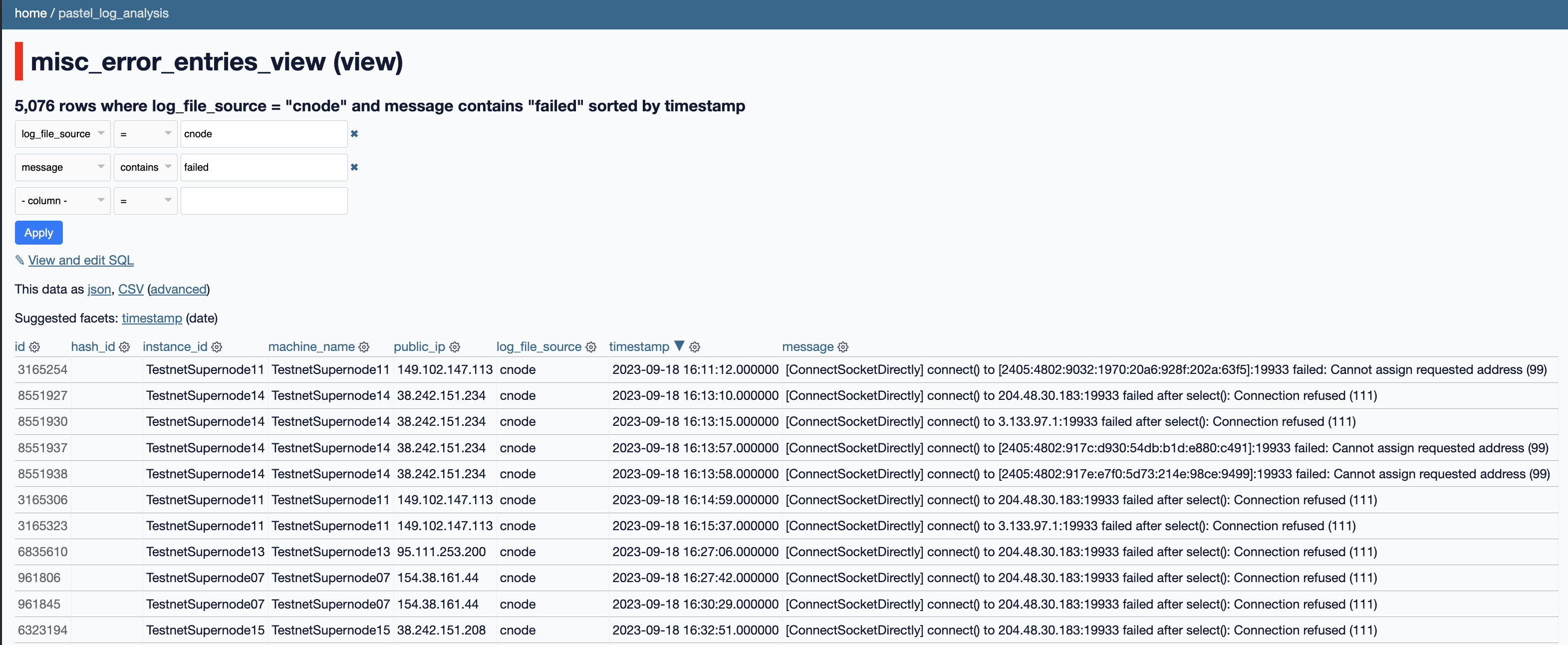

* **Process and Analyze Log Entries**: Processes and analyzes log entries stored in the database, offering functions to find error entries and create views of aggregated data based on specified criteria.

* **Generate Network Activity Data**: Fetches and processes network activity data from each remote machine.

* **Expose Database via Web App using Datasette**: Once the database is generated, it can be shared over the web using Datasette.
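The store-and-analyze steps in the list above can be sketched roughly as follows. To stay dependency-free, this sketch uses the standard-library `sqlite3` module rather than SQLAlchemy, and the table name, column names, view name, and sample rows are all assumptions made for illustration, not the project's schema.

```python
import sqlite3


def build_demo_db(rows):
    """Create an in-memory SQLite database with a hypothetical schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE log_entries ("
        "machine TEXT, timestamp TEXT, level TEXT, message TEXT)"
    )
    # Bulk insert, analogous to draining a queue of parsed entries.
    conn.executemany("INSERT INTO log_entries VALUES (?, ?, ?, ?)", rows)
    # A view of aggregated data: number of ERROR entries per machine.
    conn.execute(
        "CREATE VIEW error_counts AS "
        "SELECT machine, COUNT(*) AS error_count "
        "FROM log_entries WHERE level = 'ERROR' "
        "GROUP BY machine"
    )
    conn.commit()
    return conn


def find_errors(conn):
    """Return all ERROR entries, oldest first."""
    return conn.execute(
        "SELECT machine, timestamp, message FROM log_entries "
        "WHERE level = 'ERROR' ORDER BY timestamp"
    ).fetchall()


# Made-up sample data, for illustration only.
SAMPLE_ROWS = [
    ("MyCoolMachine01", "2023-05-01 12:00:00", "INFO", "started"),
    ("MyCoolMachine01", "2023-05-01 12:05:00", "ERROR", "disk full"),
    ("MyCoolMachine02", "2023-05-01 12:01:00", "ERROR", "timeout"),
    ("MyCoolMachine02", "2023-05-01 12:09:00", "ERROR", "timeout"),
]
```

Because the view is stored in the database file itself, a tool that browses SQLite databases would show it alongside the raw table once the file is written to disk instead of `:memory:`.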

## Compatibility

The tool is compatible with both AWS-hosted instances and any list of Linux instances stored in a standard Ansible inventory file with the following structure:

```yaml
all:
  vars:
    ansible_connection: ssh
    ansible_user: ubuntu
    ansible_ssh_private_key_file: /path/to/ssh/key/file.pem
  hosts:
    MyCoolMachine01:
      ansible_host: 1.2.3.41
    MyCoolMachine02:
      ansible_host: 1.2.3.19
```

(AWS instances and inventory-file hosts can be used together seamlessly.)
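To illustrate how an inventory with this structure maps to connection details, here is a small sketch that takes the inventory as an already-loaded dictionary (for example, the result of loading the YAML file with a YAML parser) and returns one record per host. The `RemoteHost` class and `hosts_from_inventory` function are hypothetical names, not part of the application.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class RemoteHost:
    """Hypothetical record holding what is needed to open an SSH session."""
    name: str
    address: str
    user: Optional[str]
    key_file: Optional[str]


def hosts_from_inventory(inventory: Dict[str, Any]) -> List[RemoteHost]:
    """Flatten the `all` group of an Ansible-style inventory dictionary."""
    group = inventory.get("all") or {}
    shared = group.get("vars") or {}
    hosts = []
    for name, host_vars in (group.get("hosts") or {}).items():
        # Host-level variables take precedence over group-level ones.
        merged = {**shared, **(host_vars or {})}
        hosts.append(
            RemoteHost(
                name=name,
                # Fall back to the inventory name if no address is given.
                address=merged.get("ansible_host", name),
                user=merged.get("ansible_user"),
                key_file=merged.get("ansible_ssh_private_key_file"),
            )
        )
    return hosts


# The inventory shown above, expressed as the dictionary a YAML loader returns.
EXAMPLE_INVENTORY = {
    "all": {
        "vars": {
            "ansible_connection": "ssh",
            "ansible_user": "ubuntu",
            "ansible_ssh_private_key_file": "/path/to/ssh/key/file.pem",
        },
        "hosts": {
            "MyCoolMachine01": {"ansible_host": "1.2.3.41"},
            "MyCoolMachine02": {"ansible_host": "1.2.3.19"},
        },
    }
}
```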

## Warning

To simplify the code, the tool deletes all downloaded log files and generated databases each time it runs and downloads everything again. This can consume significant bandwidth if your log files are large. However, the design's high level of parallel processing and concurrency allows it to run quickly, even when connecting to dozens of remote machines and downloading hundreds of log files.
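The "wipe, then download everything in parallel" pattern described above might look roughly like the sketch below. It is not the application's code: `reset_directory` and `download_all` are hypothetical helpers, the `fetch` callable stands in for whatever actually retrieves a log over SSH, and a thread pool is only one of several ways to get the concurrency.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Dict, Iterable


def reset_directory(path: Path) -> None:
    """Delete the directory and everything in it, then recreate it empty."""
    shutil.rmtree(path, ignore_errors=True)
    path.mkdir(parents=True, exist_ok=True)


def download_all(
    hosts: Iterable[str],
    fetch: Callable[[str], str],
    dest: Path,
    max_workers: int = 16,
) -> Dict[str, Path]:
    """Fetch one log per host concurrently and write each to its own file.

    `fetch` is a placeholder for the real transfer step (an assumption).
    """
    reset_directory(dest)  # start from a clean slate on every run
    host_list = list(hosts)

    def worker(host: str) -> Path:
        target = dest / f"{host}.log"
        target.write_text(fetch(host))
        return target

    # Threads suit this workload because it is dominated by network waits.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, so zip pairs them correctly.
        return dict(zip(host_list, pool.map(worker, host_list)))
```

Starting from an empty directory avoids any bookkeeping about which files are stale, at the cost of transferring every log again on each run.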

## Usage

The application is designed for Ubuntu 22.04+. First, install the requirements:

```bash
python3 -m venv venv
source venv/bin/activate
python3 -m pip install --upgrade pip
python3 -m pip install wheel
pip install -r requirements.txt
```

You will also need to install Redis:

```bash
sudo apt install redis -y
```

And install Datasette to expose the results as a website:

```bash
sudo apt install pipx -y && pipx ensurepath && pipx install datasette
```

To run the application every 30 minutes as a cron job, execute:

```bash
crontab -e
```

And add the following line:

```bash
*/30 * * * * . $HOME/.profile; /home/ubuntu/automatic_log_collector_and_analyzer/venv/bin/python /home/ubuntu/automatic_log_collector_and_analyzer/automatic_log_collector_and_analyzer.py >> /home/ubuntu/automatic_log_collector_and_analyzer/log_$(date +\%Y-\%m-\%dT\%H_\%M_\%S).log 2>&1
```
